I have talked a bit about the Dolly Sentences Method of kikitori, or Japanese listening study (or rather, general Japanese study with an emphasis on listening). Now we are going to look at the technicalities of how it is actually done.

I am using a Mac, and as we will see, there is a certain advantage in that, but for the most part the method will be similar for other operating systems.

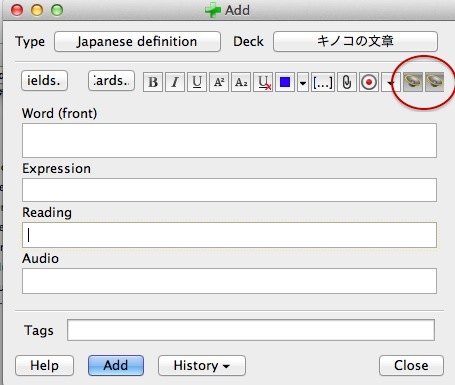

First, in your Anki you need to install an addon (Tools > Addons > Browse & install) – one or both of Awesome TTS and Google TTS. Once you have installed it/them, you will see one or two speaker icons added to the top bar of the add card window:

If you click one of these it will give a window like this.

Here you can type or paste the text you want spoken and click the preview button. If you get silence, it is probably because you haven’t changed the language. The language drop-down must be set to Japanese for Google, or to Kyoko for OSX’s voice system (if you are not using an Apple device, don’t worry about Kyoko).

The text will be read back to you. You may need to make some changes. Sometimes the synthesizer will read kanji incorrectly. Kyoko – while in most respects the best consumer-level voice-synthesizer available – is particularly bad about this. She reads 人形 as ひとかたち, for example – particularly galling to a doll. If that happens just re-spell the word in kana. You may also need to add or delete commas to get the sentence read in a way that is clear and understandable.
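If you find yourself re-spelling the same words again and again, a small script can do it for you before you paste the sentence into the synthesizer box. This is only a sketch of the idea, not part of Anki or either addon: the table and the function name are my own invention, and the only entry taken from above is 人形.

```python
# Hypothetical table of words the synthesizer misreads, mapped to kana.
# Only the 人形 entry comes from this article; add your own as you find them.
KANA_RESPELLINGS = {
    "人形": "にんぎょう",
}


def respell_for_tts(sentence, respellings=KANA_RESPELLINGS):
    """Return the sentence with each known problem word replaced by kana."""
    # Replace longer words first so a short entry cannot clobber part of a longer one.
    for word in sorted(respellings, key=len, reverse=True):
        sentence = sentence.replace(word, respellings[word])
    return sentence


print(respell_for_tts("人形が好きです。"))  # prints にんぎょうが好きです。
```

A plain text replacement like this knows nothing about context, so it is worth listening to the preview once more after re-spelling.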

When you are happy with the synthesis, just hit OK and the file will appear wherever your cursor was when you opened the box (I have an Audio field on my cards as you can see in the first screenshot).

This is really all there is to adding spoken sentences to Anki. For my method I have the audio file play on both the front and the back of the card.

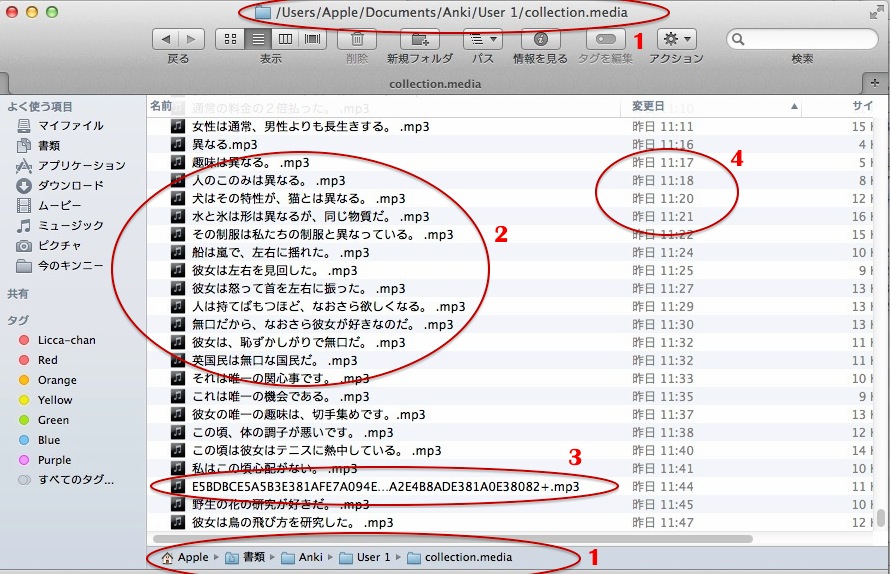

You can then harvest the sentences to put them on your MP3 player in accordance with the Dolly Sentences Method. It really is easier than you might think. First you need to find them: they are in your Anki folder, in a sub-folder called collection.media. The numbered notes below refer to the labels on the screenshot:

1. (top and bottom) shows you the file-path on a Mac. It will be similar on Windows. Just search “collection.media” if you have trouble finding it.

2. shows you the actual sentences. They are small MP3 files. If you are using Mac OSX’s Kyoko voice, the title of the file is conveniently the sentence itself.

3. If you are using the Google voice synthesizer the title is a lengthy code. But don’t worry because:

4. You can always use the date of the file to show you what you have added recently.

What you will do is simply copy-drag all your recently-added sentences into a folder and add this to your MP3 player. It really is as simple as that.
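If you would rather not drag files by hand, the same date-based harvest can be sketched in a few lines of Python. This is a minimal sketch under my own assumptions: the function names are made up, "recent" is taken to mean "modified in the last 7 days", and you pass in the location of your own collection.media folder, since it differs from machine to machine.

```python
import shutil
import time
from pathlib import Path


def recent_mp3s(media_dir, days=7, now=None):
    """Return MP3 files in media_dir modified within the last `days` days, oldest first."""
    now = time.time() if now is None else now
    cutoff = now - days * 24 * 60 * 60
    files = [
        p for p in Path(media_dir).glob("*.mp3")
        if p.is_file() and p.stat().st_mtime >= cutoff
    ]
    return sorted(files, key=lambda p: p.stat().st_mtime)


def harvest(media_dir, dest_dir, days=7):
    """Copy recent MP3 files into dest_dir and return the copied paths."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    # copy2 keeps the modification date, so the copies still sort by date added.
    return [
        Path(shutil.copy2(src, dest / src.name))
        for src in recent_mp3s(media_dir, days)
    ]
```

You would call it with something like `harvest(your_media_folder, "dolly-sentences", days=7)` and then add the resulting folder to your MP3 player. The files are copied, not moved, so your Anki cards keep their audio.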

Update: Google TTS no longer supports Anki, but Awesome TTS now gives access to the vastly superior Acapela TTS engine among others. This also has the advantage that you now only need one addon to choose between Acapela and Apple’s Kyoko voice if you have her. You can also now choose human-readable filenames for everything. The instructions in this tutorial still apply.

Here is a sample, complete with recommended 3-second break, to show how the sentences actually sound:

These sentences are spoken by Mac OSX’s Kyoko voice, which, apart from her problems with Kanji reading, is in my view the best Japanese voice synthesizer available. The third sentence is spoken by the Google synthesizer, so you can hear the difference.

I use about 95% Kyoko with the Google alternative for the minority of occasions when Kyoko really won’t read a sentence well (this also mixes up the speech a bit, which I think is good). Google’s synthesizer will be installed automatically when you install the Google TTS addon. If you are using an i-device (iPad, iPhone etc) you should be able to use Kyoko too, though I am not certain about this (please let me know in the comments if you find out).

Kyoko speaks well and naturally for the most part, and actually knows the difference in tone between many Japanese homophones. For example, if you type 奇怪 kikai (strange, mysterious) and 機械 kikai (machine), Kyoko will pronounce each of them with the correct syllable raised, which is what differentiates them in spoken Japanese.

If you end a sentence with a ?, Kyoko will raise her tone into a question intonation very naturally. The Google synthesizer does not do this, nor is it aware of tone differences between homophones. On the other hand, type a ! and Kyoko ends the sentence with a funny noise, and she makes far more kanji errors than Google. Neither of these problems really matters (just avoid ! and re-spell mispronounced kanji in kana).
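Avoiding the ! can also be done automatically on text you are about to paste into the synthesizer box. The sketch below is just one way to do it: the function name is hypothetical, and swapping the exclamation mark for a Japanese full stop (rather than simply deleting it) is my own choice. Question marks are left alone, since Kyoko handles those nicely.

```python
import re


def soften_exclamations(sentence):
    """Replace each run of ASCII or full-width exclamation marks with a single 。"""
    return re.sub(r"[!！]+", "。", sentence)


print(soften_exclamations("すごい！"))  # prints すごい。
```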

The Apple synthesizer is considerably ahead of Google’s alternative and yet is in some minor respects surprisingly unpolished. But if you have a device that supports her you should definitely use her.

So there you have the technical aspects of the Dolly Kikitori sentences method. If you have any questions or want to share your experiences, please use the comments section below. For the method itself, please go here.